At our most recent webcast, “Everything You Wanted to Know About Storage But Were Too Proud To Ask: Part Turquoise – Where Does My Data Go?, our panel of experts dove into what really happens when you hit “save” and send your data off. It was an alphabet soup of acronyms as they explained the nuances of and the differences between: Read More

Author: Chad Hintz

The Too Proud to Ask Train Makes Another Stop: Where Does My Data Go?

By now, we at the SNIA Storage Ethernet Storage Forum (ESF) hope you are familiar with (perhaps even a loyal fan of) the “Everything You Wanted To Know About Storage But Were Too Proud To Ask,” popular webcast series. On August 1st, the “Too Proud to Ask” train will make another stop. In this seventh session, “Everything You Wanted to Know About Storage But Were Too Proud To Ask: Turquoise – Where Does My Data Go?, we will take a look into the mysticism and magic of what happens when you send your data off into the wilderness. Once you click “save,” for example, where does it actually go?

When we start to dig deeper beyond the application layer, we often don’t understand what happens behind the scenes. It’s important to understand multiple aspects of the type of storage our data goes to along with their associated benefits and drawbacks as well as some of the protocols used to transport it.

In this webcast we will explain:

- Volatile v Non-Volatile v Persistent Memory

- NVDIMM v RAM v DRAM v SLC v MLC v TLC v NAND v 3D NAND v Flash v SSDs v NVMe

- NVMe (the protocol)

Many people get nervous when they see that many acronyms, but all too often they come up in conversation, and you’re expected to know all of them? Worse, you’re expected to know the differences between them, and the consequences of using them? Even worse, you’re expected to know what happens when you use the wrong one?

We’re here to help.

It’s an ambitious project, but these terms and concepts are at the heart of where compute, networking and storage intersect. Having a good grasp of these concepts ties in with which type of storage networking to use, and how data is actually stored behind the scenes.

Register today to join us for this edition of the “Too Proud To Ask” series, as we work towards making you feel more comfortable in the strange, mystical world of storage. And don’t let pride get in the way of asking any and all questions on this great topic. We will be there on August 1st to answer them!

Update: If you missed the live event, it’s now available on-demand. You can also download the webcast slides.

No Shortage of Container Storage Questions

We covered a lot of ground in out recent SNIA Ethernet Storage Forum webcast, “Current State of Storage in the Container World.” We had a technical discussion on why containers are so compelling, how Docker containers work, persistent shared storage and future considerations for container storage. We received some great questions during the live event, and as promised, here are answers to them all.

Q. Docker cannot be installed on bare metal and requires a base OS to operate upon right?

A. That is correct.

Q. Does the application code need to be changed so that it can “fit and operate” in a container?

A. No, the application code does not need to change. The challenge most people face when migrating an application to a container is how to maintain the application’s state. One of the motivations for this webcast was to explain how to allow applications within containers to persist data. Hopefully the Docker Volume construct will meet your needs.

Q. Seems like containers share one OS/kernel… That suggests that there is just one OS in the “containerized” server… And yet there is still mention of hypervisor (or at least Hyper-V)… Can you clarify? If the containers share an OS, is a hypervisor needed?

A. You are correct, containers are designed to share a single kernel; therefore a hypervisor is not required to run containers. Having said that, VMware and Microsoft both offer options that run a single container in its own virtual machine (running a minimal operating system).

Q. Can the Docker Hub be compared to something like the GitHub?

A. Yes, that is a great analogy. Docker Hub (hub.docker.com) is to container images as GitHub (github.com) is to source code.

Q. What are the differences between the base and the host image?

A. If you’re referring to the webcast slides; the box labeled “Base Image” is the first layer in an image. The box labeled “Host OS” is not a layer, but represents the hosting operating system (kernel) that is shared by the containers.

Q. So there is a separate root per container?

A. In most cases the image will provide a root, therefore each container will have a separate root. This is made possible by a kernel feature called namespaces. Alternatively, Docker does allow you to share a directory between the host operating system and any number of containers though.

Q. If Deduplication is enabled on the storage LUNs, won’t that affect the performance of the containers?

A. Well implemented data reduction features (compression and deduplication) should have little to no effect on performance and should provide significant benefit by reducing the space required to store containers.

Q. Can you please quickly review the concept of copy-on-write with one or two sentences to boil it down?

A. How the copy-on-write works depends on whether the driver is file or block based. For the sake of simplicity, let’s assume a file-based implementation. Since the image layers are read-only, we need an area to store the changes that the container has made. This area is the copy-on-write layer. When a process reads a file that has not been modified, the file is read from one of the read only layers. When that file is modified and needs to be written back to disk, the new file is written to the copy-on-write layer as is the metadata that describes the file. The next time this file is read, it is read from copy-on-write layer. The graph driver is responsible for this functionality and varies by implementation.

Q. Can network locations be used for /data? If yes, how does the Docker Engine manage network authentication for the driver?

A. Yes, network locations can be used. The best practice is to use the Local Volume Driver, where you can pass in the required authentication via the options (see slide 15). Alternatively, the network location can be mounted on the host operating system and exposed to containers (see slides 21 & 22).

Q. Is this where VAAI like primitives would get implemented?

A. VAAI defines several in-band primitives. The Docker Volume plug-in framework is completely out-of-band. There can be some overlap in features though. For example, the XCOPY primitive can be used to offload ‘copy jobs’ to an array. If the vendor chooses to do so, a ‘copy job’ can be offloaded through the Docker Volume plug-in as well. For example, a plug-in might implement a “clone” option that provides this service.

Q. Could you share some details about Kubernetes storage ? Persistent volumes and the difference from Docker volumes? Also, what is your perspective of Flocker?

A. Kubernetes has the concept of persistent storage. This abstraction is also called a volume. In addition, Kubernetes provides a plug-in option as well. The Kubernetes implementation predates the Docker Volume and is currently not compatible.

Q. Comment on mainframe: IBM runs Linux on zSeries, therefore can run Linux Docker containers.

A. Thanks, that’s good to know.

Q. How many operating systems changes on the x86 platform? How many on the mainframe platform? Can x86 architecture run the same code/OS from 40 years ago? Docker on mainframe?

A. The mainframe architecture has been very solid and consistent for many years.

Q. What is a big challenge for storage in container environment?

A. I don’t think storage has a challenge in the container environment. I think, with a properly implemented Docker Volume Plug-in, storage provides a solution to the persistent shared storage need in a container environment.

Q. Do you ever look into RexRay or VMDK storage drivers?

A. Yes, these are both examples of Docker Volume plug-in implementations.

Update: If you missed the live event, it’s now available on-demand. You can also download the webcast slides.

The Current State of Storage in the Container World

It seems like everyone is talking about containers these days, but not everyone is talking about storage – and they should be. The first wave of adoption of container technology was focused on micro services and ephemeral workloads. The next wave of adoption won’t be possible without persistent, shared storage. That’s why the SNIA Ethernet Storage Forum is hosting a live webcast on November 17th, “Current State of Storage in the Container World.” In this webcast, we will provide an overview of Docker containers and the inherent challenge of persistence when containerizing traditional enterprise applications. We will then examine the different storage solutions available for solving these challenges and provide the pros and cons of each. You’ll hear:

- An Overview of Containers

- Quick history, where we are now

- Virtual machines vs. Containers

- How Docker containers work

- Why containers are compelling for customers

- Challenges

- Storage

- Storage Options for Containers

- NAS vs. SAN

- Persistent and non-persistent

- Future Considerations

- Opportunities for future work

This webcast should appeal to those interested in understanding the basics of containers and how it relates to storage used with containers. I encourage you to register today! We hope you can make it on November 17th. And if you’re interested in keeping up with all that SNIA is doing with containers, please sign up for our Containers Opt-In Email list and we’ll be sure to keep you posted.

Update: If you missed the live event, it’s now available on-demand. You can also download the webcast slides.

NFS FAQ – Test Your Knowledge

How would you rate your NFS knowledge? That’s the question Alex McDonald and I asked our audience at our recent live Webcast, “What is NFS?.” From those who considered themselves to be an NFS expert to those who thought NFS was a bit of a mystery, we got some great questions. As promised, here are answers to all of them. If you think of additional questions, please comment in this blog and we’ll get back to you as soon as we can.

Q. I hope you touch on dNFS in your presentation

A. Oracle Direct NFS (dNFS) is a client built into Oracle’s database system that Oracle claims provides faster and more scalable access to NFS servers. As it’s proprietary, SNIA doesn’t really have much to say about it; we’re vendor neutral, and it’s not the only proprietary NFS client out there. But you can read more here if you wish at the Oracle site.

Q. Will you be talking about pNFS?

A. We did a series of NFS presentations that covered pNFS a little while ago. You can find them here.

Q. What is the difference between SMB vs. CIFS? And what is SAMBA? Is it a type of SMB protocol?

A. It’s best explained in this tutorial that covers SMB. Samba is the open source implementation of SMB for Linux. Information on Samba can be found here.

Q. Will you touch upon how file permissions are maintained when users come from an SMB or a non-SMB connection? What are best practices?

A. Although NFS and SMB share some common terminology for security (ACLs or Access Control Lists) the implementations are different. The ACL security model in SMB is richer than the NFS file security model. I touched on some of those differences during the Webcast, but my advice is; don’t expect the two security domains of SMB (or Samba, the open source equivalent) and NFS to perfectly overlap. Where possible, try to avoid the requirement, but if you do need the ability to file share, talk to your NFS server supplier. A Google search on “nfs smb mixed mode” will also bring up tips and best practices.

Q. How do you tune and benchmark NFSv4?

A. That’s a topic in its own right! This paper gives an overview and how-to of benchmarking NFS; but it doesn’t explain what you might do to tune the system. It’s too difficult to give generic advice here, except to say that vendors should be relied on to provide their experience. If it’s a commercial solution, they will have lots of experience based on a wide variety of use cases and workloads.

Q. Is using NFS to provide block storage a common use case?

A. No, it’s still fairly unusual. The most common use case is for files in directories. Object and block support are relatively new, and there are more NFS “personalities” being developed, see our ESF Webcast on NFSv4.2 for more information.

Q. Can you comment about file locking issues over NFS?

A. Locking is needed by NFS to maintain file consistency in the face of multiple readers and writers. Locking in NVSv3 was difficult to manage; if a server failed or clients went AWOL, then the lock manager would be left with potentially thousands of stale locks. They often required manual purging. NFSv4 simplifies that by being a stateful protocol, and by integrating the lock management functions and employing timeouts and state, it can manage client and server recovery much more gracefully. Locks are, in the main, automatically released or refreshed after a failure.

Q. Where do things like AFS come into play? Above NFS? Below NFS? Something completely different?

A. AFS is another distributed file system, but it is not POSIX compliant. It influenced but is not directly related to NFS. Its use is relatively small; SMB and NFS dominate. Wikipedia has a good overview.

Q. As you said NFSv4 can hide some of the directories when exporting to clients. Can this operation hide different folders for different clients?

A. Yes. It’s possible to maintain completely different exports to expose or hide whatever directories on the server you wish. The pseudo file system is built separately for each server export. So you can have export X with subdirectories A B and C; or export Y with subdirectories B and C only.

Q. Similar to DFS-N and DFS-R in combination, if a user moves to a different location, does NFS have a similar methodology?

A. I’m not sure what DFS-N and DFS-R do in terms of location transparency. NFS can be set up such that if you can contact a particular server, and if you have the correct permissions, you should be able to see the same exports regardless of where the client is running.

Q. Which daemons should be running on server side and client side for accessing filesystem over NFS?

A. This is NFS server and client specific. You need to look at the documentation that comes with each.

Q. Regarding VMware 6.0. Why use NFS over FC?

A. Good question but you’ll need to speak to VMware to get that question answered. It depends on the application, your infrastructure, your costs, and the workload.

Update: If you missed the live event, it’s now available on-demand. You can also download the webcast slides.

Next Live Webcast: NFS 101

Need a primer on NFS? On March 23, 2016, The Ethernet Storage Forum (ESF) will present a live Webcast “What is NFS? An NFS Primer.” The popular and ubiquitous Network File System (NFS) is a standard protocol that allows applications to store and manage data on a remote computer or server. NFS provides two services; a network part that connects users or clients to a remote system or server; and a file-based view of the data. Together these provide a seamless environment that masks the differences between local files and remote files.

At this Webcast, Alex McDonald, SNIA ESF Vice Chair, will provide an introduction and overview presentation to NFS. Geared for technologists and tech managers interested in understanding:

- NFS history and development

- The facilities and services NFS provides

- Why NFS rose in popularity to dominate file based services

- Why NFS continues to be important in the cloud

As always, the Webcast will be live and Alex and I will be on hand to answer your questions. Register today. Alex and I look forward to hearing from you on March 23rd.

Update: If you missed the live event, it’s now available on-demand. You can also download the webcast slides.

Congestion Control in New Storage Architectures Q&A

We had a great response to last week’s Webcast “Controlling Congestion in New Storage Architectures” where we introduced CONGA, a new congestion control mechanism that is the result of research at Stanford University. We had many good questions at the live event and have complied answers for all of them in this blog. If you think of additional questions, please feel free to comment here and we’ll get back to you as soon as possible.

Q. Isn’t the leaf/spine network just a Clos network? Since the network has loops, isn’t there a deadlock hazard if pause frames are sent within the network?

A. CLOS/Spine-Leaf networks are based on routing, which has its own loop prevention (TTLs/RPF checks).

Q. Why isn’t the congestion metric subject to the same delays as the rest of the data traffic?

A. It is, but since this is done in the data plane with 40/100g within a data center fabric it can be done in near real time and without the delay of sending it to a centralized control plane.

Q. Are packets dropped in certain cases?

A. Yes, there can be certain reasons why a packet might be dropped.

Q. Why is there no TCP reset? Is it because the Ethernet layer does the flowlet retransmission before TCP has to do a resend?

A. There are many reasons for a TCP reset, CONGA does not prevent them, but it can help with how the application responds to a loss. If there is a loss of the flowlet it is less detrimental to how the application performs because it will resend what it has lost versus the potential for full TCP connection to be reset.

Q. Is CONGA on an RFC standard track?

A. CONGA is based on research done at Stanford. It is not currently an RFC.

The research information can be found here.

Q. How does ECN fit into CONGA?

A. ECN can be used in conjunction with CONGA, as long as the host/networking hardware supports it.

New Webcast: Data Center Congestion Control

How do new architectures being deployed in today’ s data centers affect IP-based storage? Find out on September 15th in our next SNIA Ethernet Storage Forum live Webcast, “Data Center Congestion Control,” where we will discuss new architectures and a new congestion control mechanism called CONGA. Developed from research done at Stanford, CONGA is a network-based distributed congestion-aware load balancing mechanism. It is being researched for use in next generation data centers to help enhance IP-based storage networks and is becoming available in commercial switches. This Webcast will dive into:

- A definition of CONGA

- How CONGA efficiently handles load balancing and asymmetry without TCP modifications

- CONGA as part of a new data center fabric

- Spine-Leaf/CLOS architectures

- Affects of 40g/100g in these architectures

- The CONGA impact on IP storage networks

Discover the new data center architectures that will support the most demanding applications such as big data analytics and large-scale web services. As always, this Webcast will be live. I encourage you to register today and bring your questions.

Real-World FCoE Best Practices Q&A

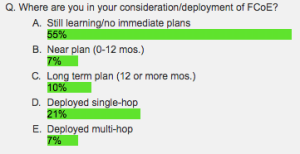

At our recent live Webcast “Real-World FCoE Designs and Best Practices,” IT leaders from Thermo Fisher Scientific and Gannett Co. shared their experiences from their FCoE deployments – one single-hop, one multi-hop. It was a candid discussion on the lessons they learned. If you missed the Webcast, it’s now available on demand. We polled the audience to see what stage of FCoE deployment they’re in (see the poll results at the end of this blog). Just over half said they’re still in learning mode. To that end, here are answers to the questions we got during the Webcast. As you will see, many of these questions were directed to our guest end users regarding their experiences. I hope that it will help you in your journey. If you have additional questions, please ask them in the comments section in this blog and we’ll get back to you as soon as possible.

Q. Have any issues come up where the storage team needed to upgrade SAN switch firmware to solve a problem, but the network team objected to upgrading the FCFs? This assumes a shared firmware release on both network and SAN switch products (i.e. Cisco NX-OS)

A. No we need to work together as a team so as long as it is planned out in advance this has not been an issue.

Q. Is there any overhead at the host CPU level when using FCOE/CNA vs. using FC/HBA? Has anyone done any benchmarking on this?

A. To the host OS it is the CNA that presents a HBA and 10G Ethernet adapter, so to the host OS there is not a difference from what is normally presented for Ethernet and FC adapters. In a software FCoE implementation there might be, but you should check with the particular implementation from the OS vendors for this information.

Q. Are there any high-level performance considerations when compared to typical FC SAN? Any obvious impact to IO latency as hosts are moved to FCoE compared to FC?

A. There is a performance increase in comparison to 8GB Fibre channel since FCoE using Ethernet and 64/66b encoding vs. 8/10b encoding that native 8GB uses. On dedicated links it could be around 50% increase in performance from 10GB FCoE vs. 8GB FC.

Q. Have you planned to use of 40G – FCoE in you edge core design?

A. We have purchased the hardware to go 40G if we choose to.

Q. Was DCB used to isolate the network traffic with FC traffic at the CNA?

A. DCB is a set of technologies that includes DCBX, PFC, ETS that are used with FCoE.

Q. Was FCoE implemented on existing hosts or just on new ones being added to the SAN?

A. Only on new hosts.

Q. Can you expand on Domain_ID sprawl ?

A. In FC or FCoE fabrics each storage vendor supports only a certain amount of switches per fabric. Each full FC or FCoE switch will consume a Domain ID, so it is important to consider how many switches or domain IDs are allowed in a supported fabric based on the storage vendor’s fabric recommendations. There are technologies such as NPIV and vendor specific technologies that can be helpful to limit domain ID sprawl in your fabrics.

Remember the poll I mentioned during the Webcast? Here are the results. Let us know where you are in your FCoE deployment plans.

Webcast Preview: End Users Share their FCoE Stories

Fibre Channel over Ethernet (FCoE) has been growing in popularity year after year. From access layer, to multi-hop and beyond, FCoE has established itself as a true solution in the data center.

Are you interested in learning how customers are using FCoE? Join us on December 10th, at 3:00 pm ET, 1:00 pm PT for our live Webcast, “Real World FCoE Designs and Best Practices“. This live SNIA Webcast examines the most used FCoE designs and looks at how this is being used in REAL world customer implementations. You will hear from two IT leaders who have implemented FCoE and why they did so. We will cover:

- Real-world Use Cases and Customer Implementations of:

- Single-Hop FCoE

- Multi-Hop FCoE

- Use of FCoE for Inter-Switch Links (ISLs)

This will be a vendor-neutral live presentation. Please join us on December 10th and bring your questions for our panel.