The SNIA Networking Storage Forum (NSF) recently took on the topics surrounding data reduction with a 3-part webcast series that covered Data Reduction Basics, Data Compression and Data Deduplication. If you missed any of them, they are all available on-demand.

In Not Again! Data Deduplication for Storage Systems” our SNIA experts discussed how to reduce the number of copies of data that get stored, mirrored, or backed up. Attendees asked some interesting questions during the live event and here are answers to them all.

Q. Why do we use the term rehydration for deduplication? I believe the use of the term rehydration when associated with deduplication is misleading. Rehydration is the activity of bringing something back to its original content/size as in compression. With deduplication the action is more aligned with a scatter/gather I/O profile and this does not require rehydration.

A. “Rehydration” is used to cover the reversal of both compression and deduplication. It is used more often to cover the reversal of compression, though there isn’t a popularly-used term to specifically cover the reversal of deduplication (such as “re-duplication”). When reading compressed data, if the application can perform the decompression then the storage system does not need to decompress the data, but if the compression was transparent to the application then the storage (or backup) system will decompress the data prior to letting the application read it. You are correct that deduplicated files usually remain in a deduplicated state on the storage when read, but the storage (or backup) system recreates the data for the user or application by presenting the correct blocks or files in the correct order.

Q. What is the impact of doing variable vs fixed block on primary storage Inline?

A. Deduplication is a resource intensive process. The process of sifting the data inline by anchoring, fingerprinting and then filtering for duplicates not only requires high computational resources, but also adds latency on writes. For primary storage systems that require high performance and low latencies, it is best to keep these impacts of dedupe low. Doing dedupe with variable-sized blocks or extents (for e.g. with Rabin fingerprinting) is more intensive than using simple fixed-sized blocks. However, variable-sized segmentation is likely to give higher storage efficiency in many cases. Most often this tradeoff between latency/performance vs. storage efficiency tips in favor of applying simpler fixed-size dedupe in primary storage systems.

Q. Are there special considerations for cloud storage services like OneDrive?

A. As far as we know, Microsoft OneDrive avoids uploading duplicate files that have the same filename, but does not scan file contents to deduplicate identical files that have different names or different extensions. As with many remote/cloud backup or replication services, local deduplication space savings do not automatically carry over to the remote site unless the entire volume/disk/drive is replicated to the remote site at the block level. Please contact Microsoft or your cloud storage provider for more details about any space savings technology they might use.

Q. Do we have an error rate calculation system to decide which type of deduplication we use?

A. The choice of deduplication technology to use largely depends on the characteristics of the dataset and the environment in which deduplication is done. For example, if the customer is running a performance and latency sensitive system for primary storage purposes, then the cost of deduplication in terms of the resources and latencies incurred may be too high and the system may use very simple fixed-size block based dedupe. However, if the system/environment allows for spending extra resources for the sake of storage efficiency, then a more complicated variable-sized extent based dedupe may be used. About error rates themselves, a dedupe storage system should always be built with strong cryptographic hash-based fingerprinting so that the error rates of collisions are extremely low. Errors due to collisions in a dedupe system may lead to data loss or corruption, but as mentioned earlier these can be avoided by using strong cryptographic functions.

Q. Considering the current SSD QLC limitations and endurance… Can we say that if a right choice for deduped storage?

A. In-line deduplication either has no effect or reduces the wear on NAND storage because less data is written. Post-process deduplication usually increases wear on NAND storage because blocks are written then later erased–due to deduplication–and the space later fills with new data. If the system uses post-process deduplication, then the storage software or storage administrator needs to weigh the space savings benefits vs. the increased wear on NAND flash. Since QLC NAND is usually less expensive and has lower write endurance than SLC/MLC/TLC NAND, one might be less likely to use post-process deduplication on QLC NAND than on more expensive NAND which has higher endurance levels.

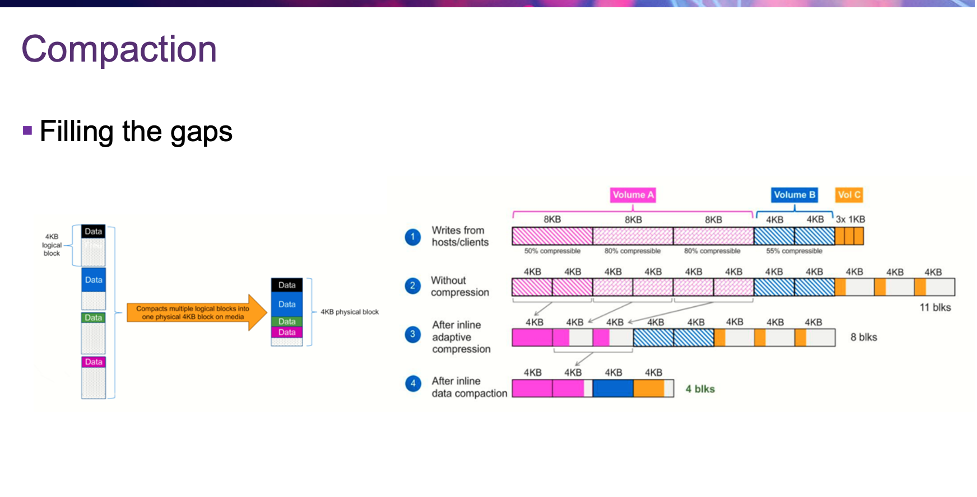

Q. On slides 11/12 – why not add compaction as well – “fitting” the data onto respective blocks and “if 1k file, not leaving the rest 3k of 4k block empty”?

A. We covered compaction in our webcast on data reduction basics “Everything You Wanted to Know About Storage But Were Too Proud to Ask: Data Reduction.” See slide #18 below.

Again, I encourage you to check out this Data Reduction series and follow us on Twitter @SNIANSF for dates and topics of more SNIA NSF webcasts.