For the third time, our storage performance benchmarking experts, Ken Cantrell and Mark Rogov, have generated an abundance of interest (in the form of questions) on block storage performance. If you missed the Webcast, “Storage Performance Benchmarking: Block Components,” it’s available on demand. It was no small effort to answer all the great questions that we received. And for those of you who have been waiting, we apologize, but we think the detailed and thoughtful answers Mark and Ken have put together are well worth the wait.

Q1: Are these numbers applicable to the 90th percentile for any given storage array, please?

Mark: These numbers represent HDD/SSD performance numbers. They aren’t meant to represent any particular storage array vendor’s performance. See the end of our presentation (bottleneck analysis) as to why it is really really hard to answer your question.

Q2: How about NVDIMM-F or NVDIMM-P or NVDIMM-X claiming 3-4M IOPS type of Enterprise storage devices?

Ken: Yup. They’re fast.

There’s a great presentation by Jim Handy titled “Understanding the Intel/Micron 3D XPoint Memory” presented at SDC2015 that I’d recommend you take a look at to understand more about this kind of memory and its possible positioning.

Mark: Great question. I think the conclusion of our presentation answers it. Flash (and we use flash as a collective term, defining everything that is not spinning storage to be “flash”) is drastically faster than spinning drives. But even within Flash, there are plenty of new technologies which compete with each other and improve the overall performance landscape. So, within the scope of our presentation, even a simple good old SLC drive tops the capability of a SAS line. If we improve on one drive, by switching the technology to a faster/newer/better variant (e.g., NVDIMM-F), or by stacking the drives, the resulting set will much more likely expose the limitations of the “regular” storage array.

Q3: I’d like to know which tool you are using to measure IOPS if possible.

Ken: The SNIA Solid State Storage Initiative (SSSI) has developed substantial expertise in the area of SSD performance and behavior. The SSS Performance Test Specifications were developed by the SNIA SSS Technical Work Group (TWG) and define how to measure SSD performance in a manner that is accurate, repeatable and enables comparison between different manufacturers’ products. Learn more about the SSD Performance Project here.

All of the Flash and HDD numbers at the beginning of the presentation were taken directly from the Solid State Storage Performance Test Specification summary results (SSS PTS). The SSS PTS provides a comprehensive method for measuring flash performance in the most vendor neutral approach that I’ve seen.

The Flash and HDD numbers at the end of the presentation were 80% of the starting numbers – scaled down to make them slightly more like what we’ve seen in a greater number of environments (that aren’t pushing their drives as hard).

Q4: Throughputs with SSD is not as much as one can get from a spinning drive when one keeps cost/GB on the axis. Comments please.

Ken: Now we have 3 axes? I’m not even sure how to visualize what you’re asking, but I’m pretty sure I understand the intent … and this is a harder question than it would appear on the surface. Why?

- First off, prices aren’t my thing – I tend to focus on the internals and let the sales guys talk prices. Additionally, vendors often engage in significant discounting or bundling that makes it difficult for the average person (i.e., me) to understand true costs.

- The astounding random I/O performance of flash enables support for compression and deduplication without dramatically increasing client-perceived latency. There’s a reason you see so many vendors offering inline deduplication and inline compression now when they did not even five years ago – flash is the enabler that makes this happen. So what is the true comparison? Raw HDD vs Raw flash? Or Raw HDD vs flash plus the storage efficiency (SE) savings it enables? If flash with SE features (dedupe and compression), then what is the savings that you can/should expect for your dataset? 1.5x? 5x? 50x? Knowing this is a prerequisite to answering the question, and the answer will be dependent both on the vendor’s features and your own data set characteristics.

- As we discussed in the first session, if your application/user base have some sort of minimum performance expectations, particularly around latency, then HDDs may simply not be able to provide you the performance you need. You DID mention throughput (IOPS?) explicitly and with IOPS, OPS, or data rates (MB/s), you can always match flash data rates with HDDs – it just might take a LOT more HDD drives than flash devices. Latency/response time is different though – depending on whether you are drive bound and what your I/O characteristics look like (read vs write, random vs sequential), you may simply be unable to ever hit your latency targets with HDD.

- The world, it is a-changing. six years ago it was easy to say “SSD for performance sensitive niche applications!” and smile. Today, prices continue to drop, vendors are making new decisions around the use of consumer grade vs enterprise grade flash, and overall flash/SSD is moving much more mainstream. And … consider the new 16TB (yes 16 TERABYTE) SSD drives announced by Samsung. My personal view (and I’m explicitly disclaiming that I’m speaking on my behalf, and not NetApp’s – which honestly, you should assume for all my answers) is that these are going to change the landscape almost as dramatically as SSD itself has.

- There are definitely vendors that believe in the cost benefits of HDD. We chose not to mention specific vendors in the webcast, but consider BackBlaze. In their blog, they are extremely open about how they have configured their data center – and they are an (all?) HDD shop. In fact, “by the end of 2015, the Backblaze datacenter had 56,224 spinning hard drives containing customer data.” Speaking of Backblaze, you might be interested in their assessment of the 16TB drive, for their shop.

You might also be interested in slide 21 of the following, which includes some price/performance numbers from EMC and Oracle.

Q5: Does NVMe drive technology move things to a higher level?

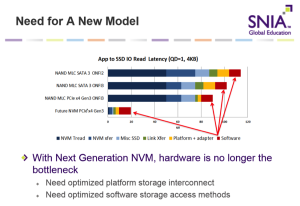

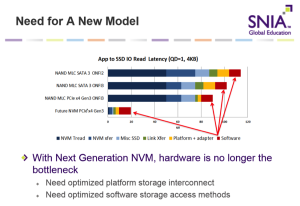

Ken: If you truly mean NAND-based flash accessed via NVMe instead of SAS/SATA, yes. Look at the perf results linked out of question 3. If you mean the use of next-generation non-volatile memory (NVM) instead of NAND-based flash, then yes. The following chart is contained in a lot of SNIA presentations; I It does a good job of pointing out just how much faster we can get.

I also strongly recommend a look through of Advances in Non-Volatile Storage Technologies by Tom Coughlin from Coughlin Associates. If you care about these topics, the SNIA Storage Developer Conference is a great opportunity to learn more.

Q6: Why NAND gates and not AND gates?

Mark: NAND and NOR gates are known as “universal gates”–they can be combined in various groups and combinations to do any basic operations, i.e., AND, NOT, OR, etc. So, flash manufacturers had to choose between NAND and NOR. And just like with any technology, the price drove the choice. NAND gates are simply cheaper and slower. NORs are faster and more expensive. Actually, there are some NOR products in the market.

Q7: Mark accidently said 15K was 15,000/sec when it’s 15,000/minute.

Ken: Thanks! (Shame on you Mark!)

Mark: Thank you… I can’t believe that I misspoke! I never do! Never! Ahh!!!

Mark’s Lawyer: On behalf of my client, I move to remove this question and the digital recording from Exhibit A to Exhibit B (aka “never again section”)

Q8: Do you guys have any data about how expensive an erase-modify-write operation is, compared with spinning disks in terms of performance?

Ken: This is what we were attempting to demonstrate in the first set of slides. The PTS (see question 3) forces flash devices into a steady state mode where they are continuously doing program-erase cycles. So the results shown there demonstrate the difference between HDD writes (seek, spin, write) and flash writes (erase and program).

Your question made me wonder though … so I also did a quick literature search. Interesting to see how rates have changed over time, and how they vary by device:

From M-Systems, in 2002: Erase cycle was 3ms

From Micron, in 2006: The erase time for a 128KB erase block was 500 µs

From AnandTech, in 2012: Erase time for SLC was 1.5-2ms, MLC was 3ms and TLC was ~4.5ms (huh? SLC vs MLC vs TLC?)

Q9: Why can’t the pointer be at the page level instead of a block level (say, metadata within a block)? I’m sure that there is a reason. What do we gain by treating an entire block as a monolithic?

Mark: This is an excellent question to ask Google. I think the reasons for selecting a NAND gate technology, and for bundling a bunch of NAND gates into groups and for creating blocks (in essence, super groups) is power. It takes less power to operate the drives with NAND gates and blocks.

Q10: I heard someone mention NOR gates, instead of NAND, are NOR gates persistent, over a power cycle?

Ken. Yes.

Mark: There are plenty of other “Logic gates” see this article on Wikipedia for more information.

Q11: So, there is no advantage in keeping IO sequentially in an SSD?

Ken: Technically, or practically? Technically speaking, I think it does matter. Micron documented this in 2006, noting that “Random access time on NOR Flash is specified at 0.075μs; on NAND Flash, random access time for the first byte only is significantly slower—25μs (see Table 2 on page 5). However, after initial access has been made, the remaining 2111 bytes are shifted out of NAND at a mere 0.025μs per byte.” The raw numbers have changed over the years, but I don’t believe the principle has. Violin Memory stated in 2013 that, “The idea of sequential I/O doesn’t exist with flash memory, because there is no physical concept of blocks being adjacent or contiguous. Logically, two blocks may have consecutive block addresses, but this has no bearing on where the actual information is electronically stored. You might therefore say that all flash I/O is random, but in truth the principles of random I/O versus sequential I/O are disk concepts so they don’t really apply.”

Practically speaking, I agree. Sequential vs random I/O is irrelevant for flash. Given (a) average I/O sizes for workloads and (b) the incredible performance of flash devices compared to the needs of the vast majority of people using them, it doesn’t much matter if you can access subsequent bytes in a NAND-based flash device faster than you can access the first bytes. They are plenty fast enough.

Note that it is hard to find public info on this. Sequential I/O tends to use larger I/O sizes, and random I/O uses smaller I/O sizes. So finding apples-to-apples comparisons between sequential and random I/O is difficult.

Mark: Yes, the flash drive doesn’t care anymore. But the hosts and application still do. Where it matters is in the workloads. Ken and I are still planning to dedicate an entire hour talking about workloads, and Random vs. Sequential will surely be a large part of it. However, we will admit that in the future, when all storage will be flash (which is, of course, a pipe dream) it won’t matter anymore.

Q12: What is the acceptance level to Erasure Coding, and hence the change in the way Storage Performance testing will change?

Mark: As we said during the webcast, RAID is a special case of Erasure Coding. Therefore its acceptance rate is 100% J But on a more serious note, Erasure Coding is necessary for any scale out system: and every vendor uses their own N+M rules.

Q13: Is RAID-1 always half the write performance? If the writes go to both drives simultaneously, I could see write performance being less than 100% of what one drive can do, but not half.

Ken: This was asked in a dry run as well. You’ve hit on something that seems to be a sticking point for multiple people. Perhaps consider it this way. It looks mathy and complicated, but bear with me …

Consider two physical drives. Call them P1 and P2.

Let the write performance (in iops) of P1 be P1w.

Let the write performance (in iops) of P2 be P2w.

How fast can P1 write? P1w.

How fast can P2 write? P2w.

If you can write to both P1 and P2 at the same time, independently, and completely in parallel, how fast can you write in aggregate? P1w + P2w.

For the previous question, what if P1w = P2w?

Then P1w + P2w = P1w + P1w = (2)*P1w.

Now …

Consider a RAID-1 pair comprised of the same P1 and P2. Call it R1.

Writes can be sent (in a good implementation) to both P1 and P2 at the same time.

But, before a write is considered complete, it must be acknowledged by BOTH P1 and P2.

If P1w > P2w, what is the best performance of R1? P2w. P2 is slower, so we’ll always be waiting on it (assuming performance is consistent), so the best we can do is P2w.

Same logic if P1w < P2w.

What if P1w = P2w? What is the best performance of R1? Same logic … but since they are the same speed, it is simply P1w.

So …

In the non-RAID-1 case, our performance (assuming P1w = P2w) was 2 * P1w.

In the RAID-1 case, our performance (assuming P1w = P2w) is P1w.

50% reduction.

RAID-1 only achieves ½ of what the physical pair could.

Mark: What Ken said.

Q14: Is there any kind of “asynch” RAID1 so that I can keep the performance of the disks but keep the mirroring?

Ken: See the previous answer also.

For reads, certainly. For writes, not that I know of, although you can make it much less visible. For example, if you have a caching RAID controller/system, your writes will go to memory and then go to disk whenever the controller/system decides to flush it. Perhaps it is big enough that it turns random I/O into sequential I/O (and you’re on HDDs) and the perf improvement from doing sequential instead (instead of random) is enough you don’t notice the effect of RAID itself.

Mark: I think that in reality, the behavior of a particular implementation is always vendor-dependent. Generally speaking, RAID1 does allow the reading from both drives, but budgets or software bugs or just plain ignorance could result in an implementation where that is not true. Address vendor documentation to know for sure.

Q15: Why do you need to read old parity to recalculate and write a new one? Isn’t the parity only calculated based on the data being written?

Ken: See answer to question #14.

Mark: It is a math trick… reading the parity saves reading the rest of the blocks on the full stripe. With 3 drives the savings are non-obvious, but with 5 or 14 there are significant.

Q16: This calculation is correct for 3 disks, right? If there are more disks and partial write is for stripe on single drive then you need to read more to calculate parity

Ken: No. There are some great write-ups about how RAID-5 works. Instead of pasting those here, I strongly encourage you to visit http://rickardnobel.se/how-raid5-works/ AND http://rickardnobel.se/raid-5-write-penalty/ and then tweet Mark (@markrogov) or Ken (@kencantrelljr) with questions/follow-up.

(I have no connection to Rickard … I just think he’s done a great job in his write-up.)

Mark: Yes, Rickard’s write up is spot on. Our goal is to introduce a fairly complex subject in a deceivingly simple manner. There are many edge cases that we don’t address: partial write to sector, partial write to a block, partial write a stripe… all those have their own consequences, and storage vendors deal with those differently.

Q17: I am also interested in Data Recovery on NAND technology

Ken: Me too. It isn’t a topic we’re planning to cover though.

Q18: Does caching write data help when one uses SSD?

Ken: It can. Memory is still faster than flash. It depends entirely on how the memory is used. For example, with writes, if memory were used as a write-through cache (look it up if you need), it wouldn’t make things faster. If it were used as a write-back cache, it would. If it is used as a read cache, it will almost certainly make reads of data faster. But even there, life is never simple. Why? Because if you’re using memory to cache data, you’re not using it for something else … and it is possible that the memory could be better used for caching metadata, for example.

Mark: Here, I’d like to recall our good friend, Dr. J Metz, who created an excellent presentation on comparing computer caches to pizza delivery in “Life of a Storage Packet (Walk)” And in his example, caching will keep the pizza warmer. Even if a flash drive is used.

Q19: If the customer is interested in throughput in MB/s then they probably won’t do IOs with 4KB size…

Ken: Agreed. I’m fairly certain that you’re referring to adding MB/s numbers on slide 41. We had a discussion about doing that when putting the slides together. The transition between slide 40 and 42 changed the I/O size from 4KiB to 128KiB, changed from writes to reads, and changed from random I/O to sequential I/O. Adding the MB/s numbers to slide 40/41 was meant to ease the transition between slide 40 and 42. You’re absolutely right though … rarely does anyone want to talk data rates (MB/s) when using small I/O sizes.

Mark: Agreed. Although a true performance guru would recognize that these are the two sides of the same coin.

Update: This webcast is part of a series on storage performance benchmarking. Check out the others: