Our recent SNIA ESF Webcast, “The Evolution of iSCSI” drew a big and diverse group of attendees. From beginners looking for iSCSI basics, to experts with a lot of iSCSI deployment experience, there were plenty of good questions. Our presenters, Andy Banta and Fred Knight, did a great job answering as many as they could during the live event, but we didn’t have time to get to them all. So here are answers to them all. And by the way, if you missed the Webcast, it’s now available on-demand.

Q. What are the top 3 reasons to choose iSCSI over FC SAN?

A. 1. Use of commodity equipment and protocols. It means that you don’t have to set up a completely separate network. It means you don’t have to buy separate HBAs. 2. Inherent networking capability. Built on top of TCP/IP, it benefits from any networking technology to come along. These include routing, tunneling, authentication, encryption, etc. 3. Ease of automation and configuration. In it’s simplest form, an iSCSI host only needs to know the IP address of the target system. In more complex systems, hosts and storage provide APIs to allow automation through scripting or management tools.

Q. Please comment on why SCSI went from being a widely used protocol for all sorts of devices to being focused as only essentially a storage protocol?

A. SCSI was originally designed as both a protocol and a bus (original Parallel SCSI). Because there were no other busses, the SCSI bus did it all; disks, tapes, scanners, printers, Optical (CDs), media changers, etc. As other busses came onto the market (think USB), many of those devices moved to the new bus (CDs, printers, scanners, etc.) Commodity devices used commodity busses (IDE, SATA, USB), and enterprise devices used enterprise busses (FC, SAS); and so, disks, tapes, and media changers mostly stayed on SCSI.

The name SCSI can be confusing for some, as the term originally was used for both the SCSI protocol and the SCSI bus. The term for the SCSI protocol is all that remains today; the SCSI bus (the old SCSI parallel bus) is no longer in wide use. Today, the FC bus, or the SAS bus, or the SoP bus, or the SRP bus are used to carry the SCSI protocol. The SCSI Architecture Model (SAM) describes a very distinct separation between the device layer (the SCSI protocol) and the transport layer (the bus).

And, the SCSI command set has become the basis for many subsequent command sets. The JEDEC group used the SCSI command set as a model (JEDEC devices are in your cell phone), the ATAPI devices used SCSI commands, and many SCSI commands and SATA commands have a common heritage. The Mt. Fuji group (a standards group in Japan) also uses SCSI as the basis for new DVD and BlueRay devices. So, while not widely known, the SCSI command family has grown well beyond what is managed by the ANSI/INCITS T10 committee that originally defined SCSI in to a broad set of capabilities that are used across the industry, by a broad group of organizations. But, that all said, scanners and printers are still on USB, and SCSI is almost all about storage in one form or another.

Q. How does iSCSI support software-defined storage?

A. Answered during the talk. SDS provides more automation and knobs on the storage capabilities. But SDS still needs a way to transport the storage and iSCSI works perfectly fine for that. They are complementary technologies, not competing.

Q. With 40Gb and faster coming soon to a server near you, what kind of impact will that have on CPU utilization? Will smaller servers be able to push that much traffic?

A. More throughput simply requires more CPU. With good multithreaded drivers available, this can mean simply adding cores to keep the pipe as full as possible. As we mentioned near the end, using iSCSI with RDMA lightens the load on the CPU even more, so you’ll probably be seeing more of that.

Q. Is IPSec commonly supported on iSCSI targets?

A. Yes, IPsec is required to be implemented on an iSCSI target to be a compliant device. However, it is not commonly enabled by customers. If they MUST provide IPsec there are a lot of non-compliant initiators and targets on the market.

Q. I’m told direct connect with iSCSI is discouraged, that there should be a switch in place to handle the buffering, latency, acknowledgement etc….. Is this true or a best practice to make sure switches are part of the design?

A. If you have no need to connect to multiple targets or multiple initiators, there’s no harm in direct connections.

Q. Ethernet was not designed to support storage traffic. The TCP/IP protocol suite was not designed to support storage traffic. SCSI was not designed to be encapsulated. So TCP/IP FTW? I think not. The reason iSCSI is exists is [perceived] cost savings. I get fed up with people constantly looking for ways to squeeze another penny out of something. To me it illustrates that they’re not very creative. Fibre Channel is a stupid name, but it is a purpose built protocol that works as designed to.

A. Ethernet is a general purpose network. It is capable of handling lots of different traffic (including storage). By putting iSCSI onto an existing Ethernet infrastructure, it can (as you point out) create a substantial cost savings over installing a FC network (although that infrastructure savings comes with other costs – such as the impact of a shared wire). However, installing a dedicated Ethernet network provides many of the advantages of a dedicated FC network, but at an added cost over that of a shared Ethernet infrastructure. While most consider FC a purpose-built storage network, it is worth pointing out that some also consider it a general purpose network (for example FC-Avionics is built into Fighter Jets, and it’s not for storage). And while not designed to be encapsulated, (it was designed for a parallel bus), SCSI today is encapsulated on every transport that carries it (yes, that includes FCP and SAS).

There are many kinds of storage at different price points, USB storage, SATA devices, rotating media (at different RPMs), SSD devices, SAS devices, FC devices, single spindles, arrays, cloud, drop boxes, etc., all with the corresponding transport wires. iSCSI is one of those wires. Each protocol and wire offer specific advantages and disadvantages. There can be a lot of confusion about which to use, but just as everyone does not drive the same type car (a FORD FUSION for example), everyone does not need the same type of storage (FC devices/arrays). Yes, I drive a FORD FUSION, and I like FC storage, but I use a USB stick on my laptop, and I pray my bank never puts my financial records out in the cloud. Selecting the right storage (and wire) for the job at hand can be one of a system administrators most interesting problems to solve.As for the name – that is often what happens in committees…

Q. As a best practice for Windows servers, disable hardware acceleration features in NICs (TOE etc.)? Are any NIC features valuable given modern multicore CPUs?

A. Yes. Typically the only reason to disable TOE is that multiple or virtual TCP/IP stacks are going to be using the same NIC. TSO, LRO and jumbo frames will benefit any OS that can take advantage of them.

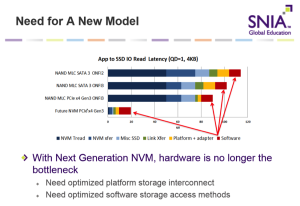

Q. What is the advantage of iSCSI when compared with NVMe?

A. NVMe and iSCSI are very different protocols. NVMe started life as a direct attach protocol to communicate to native PCIe devices (not even outside the box). iSCSI was a network protocol from day one. iSCSI has to deal with the potential for long network induced delays, and complex out of order error recovery issues. NVMe operates over an interlocked bus, and as such, does not have those issues.

But, NVMe is now being extended over fabrics. NVMe over a RoCE V1 transport will be a data center network (since there is no IP routing). NVMe over a RoCE V2 transport or an iWARP transport will have the same routing capabilities that iSCSI has. When it comes to the raw command set, they are very similar (but there are some differences). SCSI is a more full featured command set than NVMe – it has been developed over a span of over 25 years, and has developed solutions for all the problems that have been discovered during that time span. NVMe has a more limited (or more focused) command set (for example, there are no tape commands in the NVMe command set). iSCSI is available today, as is direct attach NVMe, but NVMe over Fabrics is still in the development phases (the specification is expected to be available the first week of June, 2016). NVMe products will take some time to mature and to develop solutions for the problems they have not discovered yet. Another example of this is the ability to support shared storage – it existed on day one in iSCSI, but did not exist in the first NVMe specification. To support shared storage in NVMe over Fabrics, that capability has since been added, and it was done using a SCSI compatible method (to make it easier for host S/W that already performs this function).

There is a large community working to develop NVMe over Fabrics. As memory based storage device get cheaper, and the solution space matures, NVMe will become more attractive.

Q. How often do iSCSI installations provide encryption of data in flight? How: IPsec, IKEv2-SCSI + ESP-SCSI, etc.?

A. Rarely. More often than not, if in-flight data security is needed, it will be run on an isolated network. Well under 100% of installations are 100% compliant. VMware never qualified IPsec with iSCSI and didn’t have any obvious switch to turn it on. Side note: We standards guys can be overly picky about words. Since the question is “provide” the answer is – 100% of compliant installations PROVIDE encryption (IPsec V2 – see above), however, in practice, installations that require that type of security typically run on isolated networks, rather than turn on encryption.

Q. How do multiple independent applications inside the same initiator map to iSCSI sessions to the same target? E.g., iSCSI session one-to-one with application?

A. There is no relationship between applications and sessions. When an iSCSI initiator discovers a target, the initiator logs in and establishes a session. If iSCSI MCS (multi connection session) is being used, multiple TCP connections may be established and used in parallel to process operations for that session.

Applications send reads and writes to the operating system. Those IO requests make their way through the file system and caching layers into the device driver. The device driver issues the IO request to the device (over the iSCSI session) and retains information about that IO. When a completion is received from a device (the WRITE command or READ command completed), it is matched up with the request. That completion status (success or error) is passed back through the operating system (file system, etc.) to the application. So it is the responsibility of the device driver to mux/demux the requests from all the applications out over the iSCSI session and track the responses as the operations are completed.

When an operating system is using MPIO (multi-pathing), then the device driver may create multiple sessions between the initiator and the target. This is where operating system MPIO policies such as round-robin, shortest queue, LRU, etc. come into play. In this case, the MPIO driver will send an IO operation to the device using what it considers to be the most appropriate path (based on the selected policy). But again, there is no relationship between the application and the path used for IO (any application can have it’s IO send via any path).

Today, MPIO is used more commonly than MCS.

Q. Will Microsoft iSCSI implement iSER?

A. This is a question for Microsoft or iSER-capable NIC vendor that provides Microsoft drivers.

Q.Zadara has some iSER deployments using Linux and VMware clients going to the Zadara cloud storage.

A. There’s an answer, all by itself.

Q. In the case of iWARP, the TCP layer takes care of out-of-order IP packet receptions. What layer does the out-of-order management of packets in ROCE ?

A. RoCE headers contain a 24 bit “Packet Sequence Number” that is used to validate the required ordering and detect lost packets. As such, ordering still occurs, just in a different way.

Q. Correction: RoCE is over Ethernet packets and is not routable. RoCEv2 is the one over UDP/IP and *is* routable.

A. You are correct. RoCE is not routable by IP. RoCE transmits raw Ethernet frames with just Ethernet MAC headers and no IP headers, and as such, it is not routable by IP. RoCE V2 puts the information into UDP packets (with appropriate IP headers), and therefore it is routable by IP.

Q. How prevalent is iSER today in deployment? And what are some of the typical applications that leverage iSER?

A. Not terribly prevalent today, but higher speed Ethernet might drive more adoption, due to the CPU savings demonstrated.

Update: If you missed the live event, it’s now available on-demand. You can also download the webcast slides.