As one Cisco colleague once said to me, “After the nuclear holocaust, there will be two things left: cockroaches and Ethernet.” Not sure I like Ethernet’s unappealing company in that statement, but the truth it captures is that Ethernet, now entering its fifth decade (wow!), is ubiquitous and still continuing to advance at a breathtaking pace. And as it advances, it advances the capabilities of storage networking based on the Ethernet backbone, be it file storage like NFS or SMB or block storage like iSCSI or FCoE.

The most recent evidence of Ethernet’s continuing and relentless evolution is the 28 March 2014 announcement from the Ethernet Alliance congratulating the IEEE on the formation of its IEEE P802.3bs™ Task Force:

The new group is chartered with the development of the IEEE P802.3bs 400 Gigabit Ethernet (GbE) project, which will define Ethernet Media Access Control (MAC) parameters, physical layer specifications, and management parameters for the transfer of Ethernet format frames at 400 Gb/s. As the leading voice of the Ethernet ecosystem, the Ethernet Alliance is ideally positioned to support this latest move towards standardizing and advancing 400Gb/s technologies through efforts such as the launch of the Ethernet Alliance’s own 400 GbE Subcommittee.

Ethernet is in production today from multiple vendors at 40GbE and supports all storage protocols, including FCoE, at those speeds. Market forecasters expect the first 100GbE adapters to appear in 2015. Obviously, it is too early to forecast when 400GbE will arrive, but the train is assuredly in motion. And support for all the key storage protocols we see today on 10GbE and 40GbE will naturally extend to 100GbE and 400GbE. Jim O’Reilly makes similar points in his recent InformationWeek article, “Ethernet: The New Storage Area Network,” where he argues, “Ethernet wins on schedule, cost, and performance.”

Beyond raw transport speed, the rich Ethernet infrastructure offers techniques to catapult your performance even beyond the fastest single-pipe speed. The Ethernet world has established techniques for what is variously referred to as link aggregation, channel bonding, or teaming. How many links you can combine is determined by the capabilities provided in system software and by what switch vendors will support. And those capabilities, in turn, are determined by what they respectively see as market demand. VMware, for example, today will let you bond eight 10GbE channels into a single 80GbE pipe. And that’s today with mainstream 10GbE technology.
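To make the aggregation arithmetic concrete, here is a minimal Python sketch. The function names and the CRC32-based link selection are illustrative assumptions, not any vendor's actual implementation; real bonding drivers and switches use their own distribution policies. It shows the nominal combined capacity of a bonded group and, as is common in hash-based schemes, that each individual flow is pinned to one member link so its frames stay in order.

```python
import zlib


def aggregate_capacity_gbps(link_count, link_speed_gbps):
    """Nominal combined capacity of a bonded group, in Gb/s."""
    return link_count * link_speed_gbps


def pick_link(flow_id, link_count):
    """Map a flow to one member link using a stable hash.

    Hypothetical policy for illustration: the same flow_id always
    lands on the same link, so frames within a flow are not reordered.
    """
    return zlib.crc32(flow_id.encode("utf-8")) % link_count


# Eight bonded 10GbE links, as in the VMware example above.
links, speed = 8, 10
total = aggregate_capacity_gbps(links, speed)  # 80 Gb/s nominal

# Hypothetical flow identifiers (for example, initiator-to-target pairs).
flows = ["host-a->array-1", "host-b->array-1", "host-c->array-2"]
placement = {flow: pick_link(flow, links) for flow in flows}
```

Under a policy like this, the 80 Gb/s figure is what the group can carry across many concurrent flows; a single flow would still be bounded by the speed of the one link it hashes to.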

Ethernet will continue to evolve in many different ways to support the needs of the industry. Serving as a backbone for all storage networking traffic is just one of many such roles for Ethernet. In fact, precisely because of the increasing breadth of usage models Ethernet supports, it will also continue to offer cost advantages. The argument here is a very simple volume argument:

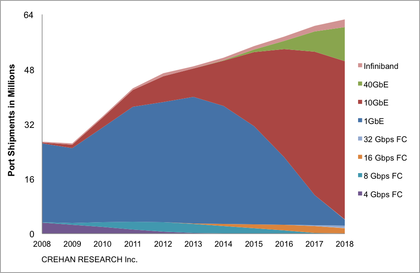

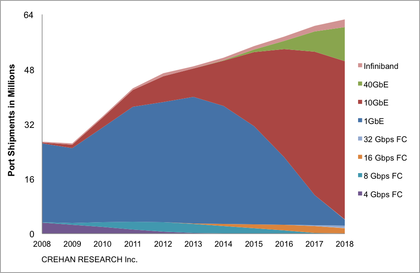

Total Server-class Adapter and LOM Market Ports

Enough said, except to also note that volume is what funds speed roadmaps.