Our recent Webcast: 10GbE – Key Trends, Drivers and Predictions was very well received and well attended. We thank everyone who was able to make the live event. For those of you who couldn’t make it, it’s now available on demand. Check it out here.

There wasn’t enough time to respond to all of the questions during the Webcast, so we have consolidated answers to all of them in this blog post from the presentation team. Feel free to comment and provide your input.

Question: When implementing VDI (1000 to 5000 users), what are the best practices for architecting the enterprise storage tier and avoiding peak IOPS / boot storm problems? How can SSD cache be used to minimize that issue?

Answer: In the case of boot storms for VDI, one of the challenges is dealing with the individual images that must be loaded and accessed by remote clients at the same time. SSDs can help when deployed either at the host or at the storage layer. When deduplication is enabled in these instances, a single image can be loaded into either local or storage SSD cache, so it can be served to the client much more rapidly. Additional best practices can include using cloning technologies to reduce the space taken up by each virtual desktop.
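To make the deduplication point concrete, here is a minimal sketch, not a model of any particular product. It assumes every desktop boots from an identical golden image, that the cache is large enough to hold that image, and that a deduplicating cache keys blocks by a content fingerprint. The function name `boot_storm_backend_reads` and the block counts are hypothetical, chosen only for illustration.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Content fingerprint used as the cache key when dedup is on."""
    return hashlib.sha256(data).hexdigest()


def boot_storm_backend_reads(num_desktops, image_blocks, dedup):
    """Count reads that miss the SSD cache and hit the backing disks.

    Assumption: the cache never evicts (it is big enough for the image).
    Without dedup, each desktop's copy of a block is cached separately.
    With dedup, identical blocks share a single cache entry.
    """
    cache = set()
    backend_reads = 0
    for desktop in range(num_desktops):
        for block_no, data in enumerate(image_blocks):
            key = fingerprint(data) if dedup else (desktop, block_no)
            if key not in cache:
                backend_reads += 1  # cache miss: fetch from disk
                cache.add(key)
    return backend_reads


# Hypothetical golden image of 200 blocks, booted by 1000 desktops.
golden_image = [f"os-block-{i}".encode() for i in range(200)]
without_dedup = boot_storm_backend_reads(1000, golden_image, dedup=False)
with_dedup = boot_storm_backend_reads(1000, golden_image, dedup=True)
print(without_dedup, with_dedup)
```

Under these assumptions, only the first desktop's reads reach the disks when deduplication is on; every later desktop is served from cache. Real boot storms also involve per-desktop writes and unique data, which this sketch ignores.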

Question: What are the considerations for 10GbE with LACP etherchannel?

Answer: Link Aggregation Control Protocol (IEEE 802.1AX-2008) is speed agnostic. No special consideration is required when going to 10GbE.

Question: From a percentage point of view, what is the current adoption rate of 10G Ethernet in data centers vs. adoption of 10G FCoE?

Answer: As I mentioned on the webcast, we are at the early stages of adoption for FCoE. But you can read about multiple successful deployments in case studies on the web sites of Cisco, Intel, and NetApp, to name a few. The truth is no one knows how much FCoE is actually deployed today. For example, Intel sells FCoE as a “free” feature of our 10GbE CNAs. We really have no way of tracking who uses that feature. FC SAN administrators are an extraordinarily conservative lot, and I think we all expect this to be a long transition. But the economics of FCoE are compelling and will get even more compelling with 10GBASE-T. And, as several analysts have noted, as 40GbE becomes more broadly deployed, the performance benefits of FCoE also become quite compelling.

Question: What is the difference between DCBx Baseline 1.01 and IEEE DCBx 802.1 Qaz?

Answer: There are 3 versions of DCBX:

– Pre-CEE (also called CIN)

– CEE

– 802.1Qaz

There are differences in the TLVs and in the ways they are encoded across all 3 versions. Pre-CEE and CEE are quite similar in terms of their state machines. With Qaz, the state machines are quite different: the notion of symmetric, asymmetric, and informational parameters was introduced, which changes the way parameters are passed.

Question: I’m surprised you would suggest that only 1GbE is OK for VDI. Do you mean just small campus implementations? What about a multi-location WAN for a large enterprise with 1000 to 5000 desktop VMs?

Answer: The reference to 1GbE in the context of VDI was to point out that enterprise applications will also rely on 1GbE in order to reach the desktop. 1GbE has sufficient bandwidth to address VoIP, VDI, etc… as each desktop connects to the central datacenter with 1GbE. We don’t see a use case for 10GbE on any desktop or laptop for the foreseeable future.

Question: When making a strategic bet as a CIO/CTO on the future (5-8 years plus) of my datacenter, storage network, etc., is there any technical or business case to keep FC and SAN, versus making the move to a 10/40GbE path with SSD and FC? This seems especially relevant with the move to object-based storage and the other things you talked about with Big Data and VMs. It seems I need to keep FC/SAN only if a vendor with structured data apps requires block storage?

Answer: An answer to this question really requires an understanding of the applications you run, the performance and QOS objectives, and what your future applications look like. 10GbE offers the bandwidth and feature set to address the majority of application requirements and is flexible enough to support both file and block protocols. If you have existing investment in FC and aren’t ready to eliminate it, you have options to transition to a 10GbE infrastructure with the use of FCoE. FCoE at its core is FCP, so you can connect your existing FC SAN into your new 10GbE infrastructure with CNAs and switches that support both FC and FCoE. This is one of the benefits of FCoE – it offers a great migration path from FC to Ethernet transports. And you don’t have to do it all at once. You can migrate your servers and edge switches and then migrate the rest of your infrastructure later.

Question: Can I effectively emulate or outperform a SAN on FC by building a VLAN network storage architecture based on 10/40GbE and NAS, and using SSD cache strategically?

Answer: What we’ve seen, and you can see this yourself in the Yahoo case study posted on the Intel website, is that you can get to line rate with FCoE. So 10GbE outperforms 8Gbps FC by about 15% in bandwidth. FC is going to 16 Gbps, but Ethernet is going to 40Gbps. So you should be able to increasingly outperform FC with FCoE — with or without SSDs.

Question: If I have a large legacy investment in FC and SAN, how do I cost-effectively migrate to 10 or 40GbE using NAS? Does it only have to be a greenfield opportunity? Is there a better way to build a business case for 10GbE/NAS, and what mix should the target architecture look like for a large virtualized SAN vs. NAS storage network on IP?

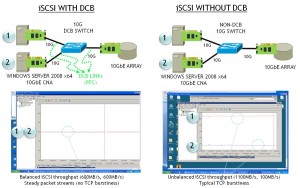

Answer: The combination of a 10Gb converged network adapter (CNA) and a top-of-rack (TOR) switch that supports both FCoE and native FC allows you to preserve connectivity to your existing FC SAN assets while putting in place a 10Gb access layer that can be used for both storage and IP. By using CNAs and DCB Ethernet switches for your storage and IP access, you also help reduce your CAPEX and OPEX (less equipment to buy and manage, using a common infrastructure). You get the added performance (throughput) benefit of 10G FCoE or iSCSI versus 4G or 8G Fibre Channel or 1GbE iSCSI. With 40GbE core switches, you have greater scalability to address future growth in your data center.

Question: If I want to build an active-active multi-petabyte storage network over a WAN with two datacenters 1000 miles apart, primarily to support Big Data analytics, why would I want to (or not want to) do this over 10/40GbE NAS vs. FC SAN? Does SAN vs. NAS really enter into the issue? If I have mostly file-based demand vs. block, is there a technical or business case to keep the SAN?

Answer: You’re right, SAN or NAS doesn’t really enter into the issue for the WAN part; bandwidth does for the amount of Big Data that will need to be moved, and will be the key component in building active/active datacenters. (Note that at that distance, latency will be significant and unavoidable; applications will experience significant delay if they’re at site A and their data is at site B.)
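As a rough back-of-the-envelope check on both points, the sketch below estimates the round-trip propagation delay over 1000 miles and the time to move one petabyte at 10 and 40 Gbps. The figures are assumptions, not measurements: light in fiber is taken as roughly 200,000 km/s, the fiber path is assumed to be exactly 1000 miles (real routes are longer), and switching, queuing, and protocol overhead are ignored. The helper names are hypothetical.

```python
MILES_TO_KM = 1.609344
FIBER_KM_PER_S = 200_000  # assumed: roughly two-thirds the speed of light


def round_trip_ms(distance_miles):
    """Propagation-only round-trip time in milliseconds."""
    one_way_s = distance_miles * MILES_TO_KM / FIBER_KM_PER_S
    return 2 * one_way_s * 1000


def transfer_hours(data_bytes, link_gbps, efficiency=1.0):
    """Hours to move data_bytes over a link at the given usable fraction."""
    bits = data_bytes * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


PETABYTE = 10**15  # decimal petabyte, in bytes

rtt = round_trip_ms(1000)
hours_10g = transfer_hours(PETABYTE, 10)
hours_40g = transfer_hours(PETABYTE, 40)

print(f"RTT over 1000 miles: {rtt:.1f} ms")
print(f"1 PB at 10 Gbps: {hours_10g:.0f} hours")
print(f"1 PB at 40 Gbps: {hours_40g:.0f} hours")
```

Even under these optimistic assumptions, the round trip is on the order of 16 ms before any equipment delay, and a single fully used 10 Gbps link needs more than nine days per petabyte. That is why bandwidth, rather than the SAN/NAS choice, dominates the WAN design.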

Inside the data center, the choice is driven by application protocols. If you’re primarily delivering file-based space, then a FC SAN is probably a luxury and the small amount of block-based demand can be delivered over iSCSI with equal performance. With a 40GbE backbone and 10GbE to application servers, there’s no downside to dropping your FC SAN.

Question: Are you familiar with VMware and Cisco plans to introduce a beta for a virtualized GPU appliance (think NVIDIA hardware GPUs) for heavy-duty 3D visualization apps on VDI, so that expensive 3D workstations like RISC-based SGI desktops are no longer needed? If so, when dealing with these heavy-duty apps, what are your concerns for the network and storage network?

Answer: I’m afraid I’m not familiar with these plans. But clearly moving graphics processing from the client to the server will add increasing load to the network. It’s hard to be specific without a defined system architecture and workload. However, I think the generic remarks Jason made about VDI and how NVM storage can help with peak loads like boot storms apply here as well, though you probably can’t use the trick of assuming multiple users will have a common image they’re trying to access.

Question: How do I get a copy of your slides from today? PDF?

Answer: A PDF of the Webcast slides is available at the SNIA-ESF Website at: http://www.snia.org/sites/default/files/SNIA_ESF_10GbE_Webcast_Final_Slides.pdf