Welcome back. Following up on the previous blog post on iSCSI over DCB, this post highlights some of the benefits that Data Center Bridging (DCB) can deliver.

DCB extends Ethernet with a network infrastructure that virtually eliminates packet loss, which improves data networking and management within the DCB environment. It does this through features for priority flow control (P802.1Qbb), enhanced transmission selection (P802.1Qaz), congestion notification (P802.1Qau), and discovery. The result is more deterministic network behavior. DCB is enabled through enhanced switches, server network adapters, and storage targets.

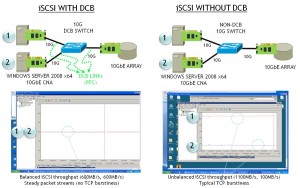

DCB delivers a “lossless” network and makes network performance extremely predictable. Standard Ethernet performs very well, but its performance fluctuates (see graphic). With DCB, the maximum performance is the same, but it varies far less. This is extremely beneficial for data center managers, who can better predict performance levels and deliver smooth traffic flows. In fact, under test, DCB eliminates the packet retransmissions caused by dropped packets, which makes not only the network more efficient but the host servers as well.
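To make the "lossless" idea a little more concrete, here is a toy sketch of how pausing a sender can replace dropping packets. It is only an illustration under simplifying assumptions: a single queue, whole-tick timing, and a single pause threshold. The function name `run_link` and all of the numbers are made up for this example, and nothing here models real 802.1Qbb pause frames or per-priority queues.

```python
def run_link(offered, line_rate, drain_rate, buffer_size, use_pfc):
    """Toy model of one switch queue fed by one sender, stepped in ticks.

    offered     -- packets the sender generates in each tick
    line_rate   -- most packets the sender can transmit per tick
    drain_rate  -- most packets the switch can forward per tick
    buffer_size -- queue capacity in packets
    use_pfc     -- if True, the switch pauses the sender instead of dropping

    Returns (delivered, dropped).
    """
    if buffer_size < line_rate:
        raise ValueError("buffer must hold at least one tick of traffic")
    # Pause threshold: leave enough headroom for one full tick of arrivals.
    xoff = buffer_size - line_rate
    queue = backlog = delivered = dropped = 0
    paused = False
    for new_packets in offered:
        backlog += new_packets
        # A paused sender holds its packets instead of transmitting them.
        sent = 0 if (use_pfc and paused) else min(backlog, line_rate)
        backlog -= sent
        # Whatever does not fit in the buffer is dropped.
        accepted = min(sent, buffer_size - queue)
        dropped += sent - accepted
        queue += accepted
        # The switch forwards up to drain_rate packets this tick.
        forwarded = min(queue, drain_rate)
        queue -= forwarded
        delivered += forwarded
        # Decide whether the sender must stay quiet on the next tick.
        paused = queue > xoff
    return delivered, dropped


# A burst of 50 packets followed by idle time, into a slower egress.
burst = [10] * 5 + [0] * 20
print(run_link(burst, line_rate=10, drain_rate=4, buffer_size=20, use_pfc=False))  # (36, 14)
print(run_link(burst, line_rate=10, drain_rate=4, buffer_size=20, use_pfc=True))   # (50, 0)
```

In this sketch the paused sender simply waits, so every packet eventually arrives; without the pause, the 14 dropped packets would have to be detected and retransmitted by the hosts.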

SEGREGATING AND PRIORITIZING TRAFFIC

In the past, storage networking best practices recommended physically separating data network traffic from storage network traffic. Today’s servers commonly have quad-port GbE adapters to ensure sufficient bandwidth, so segregating traffic has been easy – for example, two ports can be assigned to storage networks and two to data networks. In some cases these Ethernet adapters aggregate ports to deliver even greater throughput for servers.

With the onslaught of virtualization in the data center today, consolidated server environments face a different situation. Virtualization software can simplify connectivity by using a small number of 10 GbE server adapters, consolidating bandwidth instead of distributing it among many ports and a tangle of wires. These 10 GbE adapters handle all the traffic – database, web, management, and storage – improving infrastructure utilization rates. But with traffic consolidated on fewer, larger connections, how does IT segregate the storage and data networks, prioritize traffic, and guarantee service levels?

Data Center Bridging includes prioritization functionality, which improves management of traffic flowing over fewer, larger pipes. In addition to setting priority queues, DCB can allocate portions of bandwidth. For example, storage traffic can be configured as higher priority than web traffic, and the administrator can also allocate 60% of the bandwidth to storage traffic and 40% to web traffic, so that both keep running with predictable performance.
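As a rough illustration of the 60/40 example, the sketch below splits a link between traffic classes by percentage and hands any bandwidth a class does not use to the classes that still want more, which is the general idea behind enhanced transmission selection. The function name `ets_allocate`, the class names, and the 10 Gbps link are assumptions for this example; a real switch or adapter does this in hardware schedulers and is configured through vendor tools, not through code like this.

```python
def ets_allocate(link_gbps, shares, demand_gbps):
    """Divide link bandwidth among traffic classes by percentage share.

    shares      -- dict of class name -> percent of the link (must total 100)
    demand_gbps -- dict of class name -> offered load in Gbps
    Unused share is redistributed to classes that still have demand,
    in proportion to their configured percentages.
    """
    if sum(shares.values()) != 100:
        raise ValueError("shares must add up to 100 percent")
    granted = {name: 0.0 for name in shares}
    active = {name for name in shares if demand_gbps.get(name, 0) > 0}
    remaining = float(link_gbps)
    while active and remaining > 1e-9:
        total_share = sum(shares[name] for name in active)
        if total_share == 0:
            break
        satisfied = set()
        handed_out = 0.0
        for name in active:
            offer = remaining * shares[name] / total_share
            give = min(offer, demand_gbps[name] - granted[name])
            granted[name] += give
            handed_out += give
            if granted[name] >= demand_gbps[name] - 1e-9:
                satisfied.add(name)
        remaining -= handed_out
        if not satisfied:
            break  # every active class took its full offer; the link is full
        active -= satisfied
    return granted


shares = {"storage": 60, "web": 40}

# Both classes want the whole link: each is held to its configured share.
print(ets_allocate(10, shares, {"storage": 10, "web": 10}))  # {'storage': 6.0, 'web': 4.0}

# Storage is quiet: web may borrow the bandwidth storage is not using.
print(ets_allocate(10, shares, {"storage": 2, "web": 10}))   # {'storage': 2.0, 'web': 8.0}
```

The second call shows why a percentage allocation is more flexible than dedicating physical ports: the guarantee only bites when the link is actually contended.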

Thanks again for checking back here at this blog. Hopefully, you find this information useful and a good use of your last four minutes, and if you have any questions or comments, please let me know.

Regards,

Gary