I guess this is a blog that could either be very short or very long… The full name of the protocol, Data Center Bridging capability eXchange (DCBX), basically tells you all you need to know, or maybe nothing at all. At its simplest, DCBX does what it says on the tin: in practice it is used as the DCB auto-negotiation capability that makes sure the data center network is correctly and consistently configured. You can debate whether it is technically an auto-negotiation protocol or not, but in reality that is how it is used.

Now, there are many misconceptions around DCB itself. Let’s remember that DCB is actually a group within IEEE responsible for many separate standards: basically anything for Ethernet (or, as IEEE says, bridging) that is assumed to be specific to the data center. The standards and protocols currently under discussion are those related to I/O convergence (PFC, ETS, QCN, DCBX) and those related to server virtualization (Virtual Ethernet Port Aggregator, or VEPA, and others). So in essence the intent of DCBX is to help two adjacent devices share information about how these protocols are, or need to be, configured. DCBX does this by leveraging good old LLDP, just as PFC, ETS and QCN leverage 802.1p. What is particularly nice is that DCBX allows the exchange of information not only about the DCB protocols themselves but also about how upper level protocols might want to use the DCB layer.

This brings us nicely to a very critical point: like most things in this area, DCBX works purely at the link level, allowing a pair of connected ports (node to switch or switch to switch) to exchange their specific port configuration. This matters because in a multi-hop environment every link may successfully complete its DCBX negotiation, but unless some higher level intelligence (you) ensures that things are set right on each and every link, you may still not be meeting the needs of an end-to-end traffic flow. Even in a simple case of device-switch-switch-device I could have Fibre Channel over Ethernet (FCoE) negotiated on the first device-switch and last switch-device connection, and nothing configured on the intermediate switch-switch connection, and the two FCoE end points would happily talk to each other thinking that they have end-to-end lossless connectivity. In a more complex scenario let’s also remember that many L2/L3 switches can not only route between L2 domains but also reclassify traffic from one 802.1p priority to another. For this reason it is often simpler to use DCB to support 8 independent forwarding planes (one per 802.1p priority) across the data center, as this means we can simply configure all ports pretty much identically. I believe the term for trying to be clever here is ‘here be dragons’.
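
To make the device-switch-switch-device example concrete, here is a minimal sketch of the kind of check that "higher level intelligence" has to perform. Everything in it is hypothetical: the `Link` class, the link names and the choice of priority 3 for FCoE are illustrative assumptions, and in a real network the per-link data would have to come from whatever each device reports as its negotiated state.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """One hop in a path, with the priorities that ended up lossless on it.

    Hypothetical model: DCBX itself only ever sees one link at a time.
    """
    name: str
    lossless_priorities: frozenset = frozenset()


def links_missing_priority(path, priority):
    """Return the names of links on which `priority` is not lossless."""
    return [link.name for link in path
            if priority not in link.lossless_priorities]


# device-switch-switch-device, with nothing configured on the middle hop.
# Priority 3 for FCoE is an assumption made for this example.
path = [
    Link("host-a <-> switch-1", frozenset({3})),
    Link("switch-1 <-> switch-2"),
    Link("switch-2 <-> host-b", frozenset({3})),
]

gaps = links_missing_priority(path, priority=3)
if gaps:
    print("not lossless end to end; check:", gaps)
```

Each individual link here could report a perfectly successful negotiation; only walking the whole path reveals the gap.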

Anyone who has spent a little time with DCB or FCoE will know that DCBX doesn’t just help at the level of the layer 2 protocols, but also at the level of the upper level protocol we actually care about. Most well known is that DCBX can carry specific exchanges to ensure the correct configuration of DCB to support FCoE, and many people may be aware that it can do the same for iSCSI as well. Far less known, however, is that these two cases of setting up DCB for upper level protocols are in fact just that: examples. DCBX has a generic application type-length-value (TLV) format whereby you can specify what you would like for any upper level protocol that can be identified by either Ethertype or IP socket (in practice, a TCP or UDP port number). Thus DCBX has, like the rest of DCB, been carefully architected to support the full breadth of I/O and network convergence and not just the needs of storage convergence. DCBX as a protocol allows you to have an NFS Application TLV, an SMB Application TLV, an RDMA over Converged Ethernet (RoCE) Application TLV, an iWARP Application TLV, an SNMP Application TLV, and so on.
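
To show how generic the application TLV really is, here is a rough sketch of building one in Python. It follows my understanding of the IEEE version of DCBX (an LLDP organizationally specific TLV with the IEEE 802.1 OUI 00-80-C2 and subtype 12, carrying 3-byte entries of priority, selector and protocol ID); treat the constants and field layout as assumptions to check against the standard, and note that the function names and the priorities chosen are purely illustrative. The pre-standard DCBX versions use a different encoding that is not shown here.

```python
import struct

# Assumed constants for the IEEE DCBX Application Priority TLV.
IEEE_8021_OUI = bytes.fromhex("0080c2")
APP_PRIORITY_SUBTYPE = 12
LLDP_ORG_SPECIFIC = 127

SEL_ETHERTYPE = 1   # protocol ID is an Ethertype
SEL_TCP_PORT = 2    # protocol ID is a well-known TCP (or SCTP) port


def app_entry(priority, selector, protocol_id):
    """Pack one 3-byte entry: priority(3 bits) reserved(2) selector(3) id(16)."""
    if not 0 <= priority <= 7:
        raise ValueError("priority must be 0..7")
    if not 0 <= selector <= 7:
        raise ValueError("selector must be 0..7")
    if not 0 <= protocol_id <= 0xFFFF:
        raise ValueError("protocol_id must fit in 16 bits")
    return struct.pack("!BH", (priority << 5) | selector, protocol_id)


def app_priority_tlv(entries):
    """Wrap entries in an LLDP TLV: 7-bit type, 9-bit length, then the value."""
    value = IEEE_8021_OUI + bytes([APP_PRIORITY_SUBTYPE, 0]) + b"".join(entries)
    if len(value) > 0x1FF:
        raise ValueError("TLV value too long for the 9-bit length field")
    header = (LLDP_ORG_SPECIFIC << 9) | len(value)
    return struct.pack("!H", header) + value


# FCoE (Ethertype 0x8906) on priority 3 and iSCSI (TCP port 3260) on
# priority 4; the priority values are example choices, not recommendations.
tlv = app_priority_tlv([
    app_entry(3, SEL_ETHERTYPE, 0x8906),
    app_entry(4, SEL_TCP_PORT, 3260),
])
print(tlv.hex())  # fe0b0080c20c00618906820cbc
```

Nothing in the entry format is specific to storage: swapping in a different Ethertype or port number is all it takes to describe another upper level protocol.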

A final and very practical point that any article on DCBX needs to cover is that we are in an evolving world and there are multiple different, and indeed incompatible, versions of DCBX available. Just reviewing the common DCB equipment available today, you need to consider DCBX 1.0 as used by pre-standards FCoE products; DCBX 1.01, sometimes referred to as the Converged Enhanced Ethernet (CEE) or baseline version, as found most commonly on shipping products today; and DCBX IEEE as actually defined in the standards (physically mostly contained within the ETS standard). It is also important to note that while some products have mechanisms to auto discover and select which version of DCBX to use, there is in fact no standard for such mechanisms. In this case the term is, I assume, ‘caveat emptor – buyer beware’.
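
Since there is no standard way to pick a version, products that auto discover typically have to look at what the peer is actually sending. The sketch below shows one way that could work: walk the TLVs in a received LLDPDU and classify the organizationally specific ones. The identifying values are assumptions based on my understanding of common implementations (IEEE DCBX under OUI 00-80-C2 with subtypes 9 to 12, the pre-standard versions under OUI 00-1B-21 with subtype 1 for 1.0 and subtype 2 for CEE 1.01), so verify them against your own equipment; the function names are hypothetical.

```python
import struct

# Assumed identifiers; verify against the relevant specs and your devices.
IEEE_8021_OUI = bytes.fromhex("0080c2")
PRE_STANDARD_OUI = bytes.fromhex("001b21")
IEEE_DCBX_SUBTYPES = {9, 10, 11, 12}  # ETS config/recommendation, PFC, application
LLDP_ORG_SPECIFIC = 127


def iter_tlvs(lldpdu):
    """Yield (type, value) for each TLV until the End of LLDPDU TLV (type 0)."""
    offset = 0
    while offset + 2 <= len(lldpdu):
        (header,) = struct.unpack_from("!H", lldpdu, offset)
        tlv_type, length = header >> 9, header & 0x1FF
        offset += 2
        if tlv_type == 0:
            return
        value = lldpdu[offset:offset + length]
        if len(value) < length:
            raise ValueError("truncated TLV")
        yield tlv_type, value
        offset += length


def dcbx_versions_seen(lldpdu):
    """Return the set of DCBX versions the peer appears to be advertising."""
    seen = set()
    for tlv_type, value in iter_tlvs(lldpdu):
        if tlv_type != LLDP_ORG_SPECIFIC or len(value) < 4:
            continue
        oui, subtype = value[:3], value[3]
        if oui == IEEE_8021_OUI and subtype in IEEE_DCBX_SUBTYPES:
            seen.add("IEEE")
        elif oui == PRE_STANDARD_OUI and subtype == 2:
            seen.add("CEE 1.01")
        elif oui == PRE_STANDARD_OUI and subtype == 1:
            seen.add("1.0")
    return seen


# Hand-built sample: one IEEE-style TLV (subtype 11) followed by End of LLDPDU.
sample = bytes.fromhex("fe060080c20b0808" "0000")
print(sorted(dcbx_versions_seen(sample)))
```

What a device should do when it sees more than one version, or none, is exactly the part that is left to each vendor.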

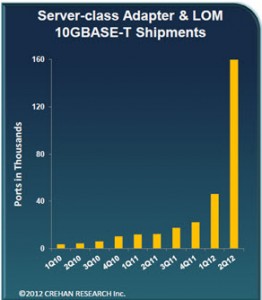

All that said, maybe I should have started this blog by reminding everyone that the I/O convergence parts of DCB are not just about allowing storage traffic to be mixed with non-storage traffic without fate sharing problems, but are actually about collapsing multiple separate networks into a single network. I believe the average server is said to have about 6 NICs today? As such, in the world of 10GbE and faster Ethernet, the full capabilities of DCBX really are a critical enabler for simplifying the operation of the modern converged, virtualized data center.