IT as a Service (ITaaS), or cloud services (clouds), was one of the "buzz" topics at SNW this spring. Industry groups, such as SNIA's Cloud Storage Initiative, are beginning to address the standards, policies, and marketing messages that help define what ITaaS, or the cloud, actually is.

Whatever the definition, "cloud" technologies are arriving ahead of the attempts to describe them. In fact, many customers are deploying cloud solutions today; obvious examples include online email and CRM solutions that have been available for several years. These cloud offerings are enabled by two highly complementary technologies: virtualization in its various forms, and Ethernet or IP networks. In this article, I'll put in a specific plug for iSCSI.

iSCSI plays well in the Cloud

In an article published in SNS Europe magazine in February 2010, I described what I think are the top five requirements for cloud deployments and how well iSCSI addresses them. The article is available online. Briefly, the five requirements are Cost, Performance, Security, Scalability, and Data Mobility.

Cost: For a cloud service provider, the cost of goods for your services is essentially your IT infrastructure, so keeping these costs low is a competitive advantage. One way to reduce cost is to move to higher-volume, and therefore lower-cost, components. Thanks to its extensive deployment across all industries, Ethernet offers the economies of scale to deliver the lowest-cost networking infrastructure, in terms of both capital and operating expense, along with simplified management.

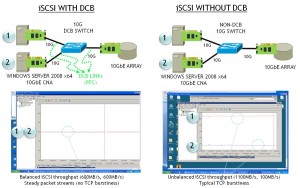

Performance: Your cloud environment needs to scale in performance to meet the demands of a growing customer base, and Ethernet offers a variety of ways and price points to address this. Gigabit Ethernet with the addition of port bonding or teaming offers simple, cost-effective scalability that is sufficient for most business applications. 10 Gigabit Ethernet is now being deployed more readily, since prices have dropped below $500 per port. We'll soon see 10 Gigabit ports as standard on server motherboards, which will significantly increase network bandwidth while reducing the number of ports and cables to contend with.
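The trade-off between bonding Gigabit ports and moving to 10 Gigabit Ethernet is mostly port-count arithmetic. The sketch below is a minimal illustration under stated assumptions: the function names are hypothetical, speeds are nominal line rates (protocol overhead ignored), and the single-flow ceiling assumes a common hash-based bonding mode that pins each TCP connection, such as one iSCSI connection, to a single member link.

```python
import math


def ports_needed(target_gbps: float, port_speed_gbps: float) -> int:
    """Hypothetical helper: minimum ports whose nominal line rates sum to target_gbps."""
    return math.ceil(target_gbps / port_speed_gbps)


def bond_bandwidth(num_links: int, link_gbps: float) -> tuple[float, float]:
    """Hypothetical helper: return (aggregate, single_flow) nominal bandwidth of a bond.

    Assumes a hash-based bonding/teaming mode: many flows spread across the
    links, but any one TCP flow is limited to a single member link's speed.
    """
    aggregate = num_links * link_gbps
    single_flow = link_gbps
    return aggregate, single_flow


if __name__ == "__main__":
    target = 20  # illustrative bandwidth target in Gb/s, not a measured figure
    print("GbE ports:", ports_needed(target, 1))     # 20 ports and cables
    print("10GbE ports:", ports_needed(target, 10))  # 2 ports and cables
    print("4 x GbE bond:", bond_bandwidth(4, 1))     # (4, 1): 4 Gb/s total, 1 Gb/s per flow
```

The single-flow figure is why bonding works well for many concurrent sessions, while a faster individual link helps when one connection needs more bandwidth.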

Security: Because Ethernet was developed as a general-purpose network, efforts were made to support data security in mixed-traffic environments. The IP protocol suite offers security protocols to address these requirements, such as CHAP for authentication and IPsec for encryption and integrity. These security protocols extend to storage traffic as well: iSCSI uses CHAP to authenticate initiators and targets at login and can run over IPsec.
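CHAP never sends the shared secret over the wire; each side proves it knows the secret by hashing it with a fresh challenge. The sketch below assumes the MD5 variant defined in RFC 1994 (negotiated in iSCSI as CHAP_A=5), where the response is MD5(identifier || secret || challenge). The helper names and the secret are hypothetical, and this is an illustration of the calculation, not a complete iSCSI login implementation. In iSCSI, the target sends CHAP_I and CHAP_C, and the initiator answers with CHAP_N and CHAP_R, with binary values carried as hex constants such as `0x...`.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """CHAP-MD5 response per RFC 1994: MD5(identifier || secret || challenge)."""
    if not 0 <= identifier <= 255:
        raise ValueError("CHAP identifier must fit in a single octet")
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def to_iscsi_hex(value: bytes) -> str:
    """Encode binary data as an iSCSI hex constant, e.g. for a CHAP_R value."""
    return "0x" + value.hex()


def verify_response(identifier: int, secret: bytes, challenge: bytes, response: bytes) -> bool:
    """Authenticator side: recompute the expected response and compare in constant time."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    secret = b"example-shared-secret"  # hypothetical secret, for illustration only
    ident = 7                          # CHAP_I chosen by the authenticator
    challenge = os.urandom(16)         # CHAP_C: a fresh random challenge per login

    resp = chap_response(ident, secret, challenge)
    print("CHAP_R =", to_iscsi_hex(resp))
    print("accepted:", verify_response(ident, secret, challenge, resp))
```

Note that CHAP only authenticates the endpoints; it does not encrypt the storage traffic itself, which is the role IPsec fills.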

Scalability: Scalability can be described in many ways. I have already referred to performance scalability, but scalability also applies to geography. IP networks span the globe and offer the capabilities needed to serve customers in diverse geographies, which is at the heart of cloud services. Because IP traffic is inherently routable, storage traffic gains additional advantages as well, such as reaching storage across subnets and remote sites.

Data Mobility: One feature of IP networks that I believe is particularly well suited to clouds is virtual IP addressing. IP addresses can move from one physical port to another, so you can easily migrate network connectivity as you migrate other virtual objects, such as virtual servers. As a result, IP-based storage protocols such as iSCSI are particularly well suited to highly virtualized cloud environments.

IP Networks for the Data Center

As the data center continues to evolve toward dynamic, highly virtualized services, Ethernet storage networks, including iSCSI, will deliver the value that cloud service providers need to succeed. IP networks offer the economics, performance, security, scalability, and mobility required for current- and next-generation data centers.

For more on this topic, check out this webinar: http://www.brighttalk.com/webcast/23778.